By John Doe August 19, 2024

Summary: In this article, we will learn the basics of AI database agent, and try to create a simple database AI agent.

Table of Contents

Introduction

Generative AI is top of mind for many engineers. The questions of how it can be applied to solve business problems and boost productivity are still up in the air. There are various AI Agents, and they can interact with different systems and APIs. The AI Agents or a combination of those will heavily impact how we work with computers and systems today.

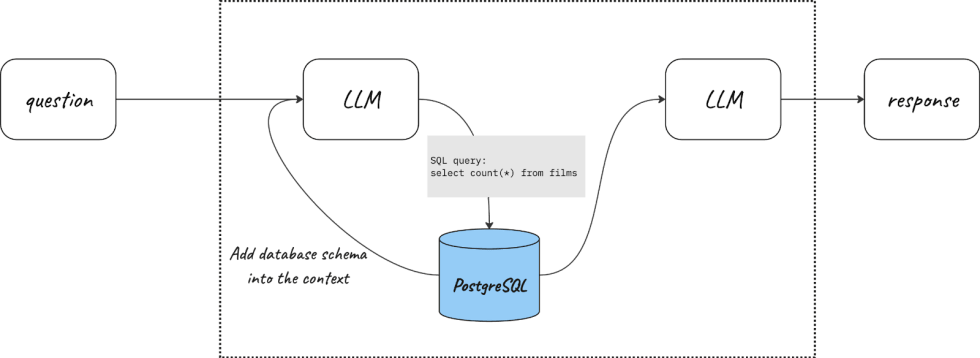

One of the examples that I want to look into today is a Database AI Agent. We may call it a text-to-SQL model that translates human-like requests into SQL language and produces human-like responses.

We will use a combination of open source tools:

- Langchain – An open source python library that offers developers a comprehensive set of resources to develop applications that run on Large Language Models (LLMs).

- PostgreSQL – We will use PostgreSQL to host the database with sample data. I will use Pagila, the famous Sakila example database ported to PostgreSQL. The structure of the database can be found here.

Setting up database

Get Pagila database SQLs from GitHub:

git clone https://github.com/devrimgunduz/pagila

Create the database:

CREATE DATABASE pagila;

Create the schema:

cat pagila/pagila-schema.sql | psql -U postgres -d pagila

Insert the data:

cat pagila/pagila-data.sql | psql -U postgres -d pagila

I will also create the user pagila to access the database from my scripts:

create user pagila with password 'SOMEPASSWORD';

\c pagila

GRANT ALL PRIVILEGES ON DATABASE pagila to pagila;

grant select on all tables in schema public to pagila;

Setting up Langchain

With Langchain, any LLM can be used. In our examples, we will use Google Gemini models, which require a key. You can also use OpenAI in the next iteration of the agent.

Start with installing the required Python packages. The usual recommendation is to use pip:

pip install --upgrade --quiet langchain langchain-google-genai pillow

A simple script, 0_langchain_gemini_test.py, asks Gemini, “What’s the weather in San Francisco?” You can set the GOOGLE_API_KEY variable, but if not, the script will ask for it.

import getpass

import os

from langchain_google_genai import ChatGoogleGenerativeAI

if "GOOGLE_API_KEY" not in os.environ:

os.environ["GOOGLE_API_KEY"] = getpass.getpass("Provide your Google API Key")

llm = ChatGoogleGenerativeAI(model="gemini-pro")

result = llm.invoke("What's the weather in San Francisco?")

print(result.content)

$ python3 0_langchain_gemini_test.py

Based on the search results, here is a summary of the current weather in San Francisco:

The weather in San Francisco is currently misty with a temperature of around 53°F (12°C). There is complete cloud cover and moderate winds from the northwest around 5-9 mph (9-14 km/h). Humidity is high at 88%. Visibility is around 3 miles (6.4 km).

The results provide an hourly forecast as well as current conditions from a couple different weather sources. Let me know if you need any additional details about the San Francisco weather!

...

It is working.

Query the database

We are done with the initial setup; let’s query the database.

Install the required libraries:

pip install --upgrade --quiet langchain-community psycopg2-binary

To experiment with querying the database we use 1_langchain_gemini_postgresql.py script. The script takes a user question in a human-readable format as an argument and produces the response.

To do that we will use the create_sql_agent function that constructs an agent specifically designed to interact with SQL databases. This agent combines a language model (like Gemini) with specialized tools that understand and manipulate SQL queries.

In addition to GOOGLE_API_KEY, we need to provide details about the database:

dbname="pagila"

username=os.environ["PG_USERNAME"]

password=os.environ["PG_PASSWORD"]

hostname="localhost"

port="5432"

Make sure that you set PG_USERNAME and PG_PASSWORD as environment variables.

Let’s start with the simple question:

$ python3 1_langchain_gemini_postgresql.py "How many films are there in the database?"

> Entering new SQL Agent Executor chain...

I should find the number of rows in the film table

Action: sql_db_query

Action Input: SELECT COUNT(*) FROM film[(1000,)]I now know the final answer

Final Answer: 1000

> Finished chain.

{'input': 'How many films are there in the database?', 'output': '1000'}

Without providing any additional context or explaining the structure of the database, I was able to get a proper answer. The way it works is the following:

But this works only for simple questions, where it is quite straightforward. For more complex questions, multiple requests are required for the database and LLMs, so-called chains. It is also quite interesting, that chains help to fix some errors in the query on the fly. Below, you can see how the text-to-sql script worked for two more questions:

- Which 5 actors have appeared in the most films?

- What are the most popular film categories? Show the top 3 with the number of films in that category.

The potential impact

Companies generate tons of data that they later use to make better business decisions. A perfectly structured BI system requires the skills of analysts and engineers. In this article, we saw how generative AI can help digest the data from rudimentary databases, but the expectation is that it will mature over time. There will be no need to learn SQL or understand how data is stored; AI will make sense of it automagically. This is going to impact how businesses store, manage, and process data.